– To establish non-verbal communication between people and robots –

Imagine that you are in a restaurant and ready to order. Instead of saying “Excuse me”, all you have to do to call a waiter is make eye contact with him and raise your hand when he looks toward your table. Such non-linguistic (non-verbal) behaviors, including facial expressions, play important roles in human communication. Many different robots are expected to help and support people in various ways in daily life, and they must be easy to use. In other words, it should be as easy to communicate with these robots as it is with people.

In this context, we have been conducting research to give robots the non-verbal communication abilities that allow people to communicate smoothly with one another.

However, robot developers do not have adequate knowledge of which non-verbal behaviors are required in which situations. We have therefore been conducting joint research with Professor Keiichi Yamazaki of the Faculty of Liberal Arts, Saitama University, a specialist in ethnomethodology, a sociological discipline that examines human behavior. In this joint research, scenes of communication among people in real settings are first videotaped, and the videos are analyzed sociologically to examine how people use verbal and non-verbal behaviors while communicating. We then develop robots based on the findings. Finally, communication between the developed robots and people is analyzed using a sociological approach to evaluate the robots and identify their problems.

However, because communication takes place in many different situations and non-verbal human behavior takes many forms, it is difficult to develop robots that can respond to all of them. Our research therefore develops robots dedicated to specific situations, or to communication for specific tasks such as responding to requests and providing explanations, and examines which types of non-verbal behavior play important roles in each situation. The ultimate goal of our research is to integrate the results of these studies into robots that can use non-verbal behaviors appropriately according to the situation.

We have already developed a museum guide robot that looks and sounds so real that visitors cannot help but nod to it; a friendly nursing care robot that people feel free to ask for anything; and a wheelchair robot that moves together with the companion of the person sitting in it, adapting its position to the situation.

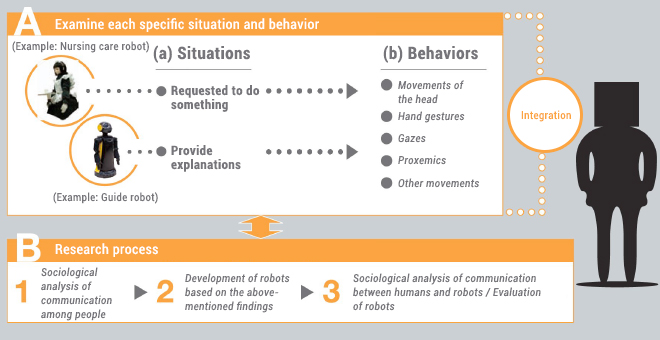

In our research, all of the robots shown in [A] are developed through the three-stage process presented in [B]. In Section A, situations that require communication are analyzed using suitable robots as subjects. Because it is difficult to analyze the behavior of a robot as a whole at once, the movements of its individual parts are examined separately, as shown in (b). The results of these individual studies are then integrated to develop robots that can communicate properly with people according to the situation.

Professor, Division of Mathematics, Electronics and Informatics, Graduate School of Science and Engineering, Saitama University

| Year | Career |
|---|---|
| 1982 | Graduated from the doctoral course of the Department of Electronics Engineering, Graduate School of Engineering, the University of Tokyo. Joined Toshiba Corporation. |
| 1987 – 1988 | Visiting Researcher, School of Computer Science, Carnegie Mellon University |
| 1993 | Associate Professor, Department of Computer-Controlled Machinery, School of Engineering, Osaka University |
| 2000 | Professor, Department of Information and Computer Sciences, Faculty of Engineering, Saitama University |

Museum Guide Robot

“Explaining something to people” is an important function of a robot. We have been studying this function by developing robots that guide visitors around museums. As a first step, we analyzed how skilled staff explain exhibits to visitors in real museums and galleries. When explaining something, the speaker often turns his or her head toward the listeners, and the listeners look at or nod to the speaker in return. By looking at or nodding to each other, the speaker and listeners confirm that they have understood each other. In other words, these actions are tools for smooth communication.

When, or in what situations, do speakers turn their heads toward listeners? An analysis of the behavior of staff working in museums showed that they looked at the listeners at the end of a meaningful unit, such as the end of a sentence. These points are called Transition Relevance Places (TRPs), a term used in conversation analysis in sociology.

We developed a robot equipped with a video camera that tracks the face of its conversation partner and turns toward that person at TRPs. In an experiment at the Ohara Museum of Art in Kurashiki City, in which the robot explained a painting by Gauguin, visitors often nodded when the robot turned its face toward them, just as they did when talking with human staff.
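The timing rule described above can be illustrated with a minimal Python sketch. It assumes, purely for illustration, that sentence-final punctuation approximates a TRP and that a face tracker reports whether a visitor's face is currently being tracked. The names `Action`, `split_units`, and `schedule`, and the action labels, are hypothetical and do not describe the actual robot's implementation.

```python
import re
from dataclasses import dataclass


@dataclass
class Action:
    kind: str       # hypothetical labels: "speak" or "turn_to_visitor"
    text: str = ""  # utterance text for "speak" actions


def split_units(script: str) -> list[str]:
    """Split an explanation into sentences; each sentence end is treated as a TRP."""
    # Crude assumption: sentence-final punctuation followed by whitespace marks a TRP.
    parts = re.split(r"(?<=[.!?])\s+", script.strip())
    return [p for p in parts if p]


def schedule(script: str, face_tracked: bool = True) -> list[Action]:
    """Interleave utterances with head turns toward the visitor at each TRP."""
    actions: list[Action] = []
    for unit in split_units(script):
        actions.append(Action("speak", unit))
        # At the TRP, turn toward the visitor only if the tracker has found a face.
        if face_tracked:
            actions.append(Action("turn_to_visitor"))
    return actions


if __name__ == "__main__":
    for a in schedule("This painting is by Gauguin. Look at the colors here."):
        print(a.kind, a.text)
```

A real system would detect TRPs from the speech being generated rather than from punctuation, but the structure is the same: the head turn is tied to the end of each meaningful unit, not to a fixed timer.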

Robots to Attract Attention

When a robot wants people to look at something, it first needs to attract their attention to itself non-verbally. We are currently developing this technology, which every communication robot needs.

The robot used in our experiments can shift its gaze because computer-graphics images of its eyes are displayed on the surface of its head.

Nursing Care Robot

With the aim of developing robots that people can easily ask to perform tasks for them, we have also been conducting research on nursing care robots. A study of how elderly residents of nursing care facilities call caregivers when they need help found that most of them turned their eyes to the care staff when the caregivers looked at them.

We are developing a robot that turns its face to a person and makes eye contact when its camera-based recognition system detects that the person is looking at the robot. In other words, when you want the robot to come and help you right away, all you have to do is look at it.
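The gaze-triggered behavior can be sketched as a small state machine. This is a hypothetical illustration: it assumes that a camera-based detector supplies one boolean per frame indicating whether the person is looking at the robot, and the class name `GazeTrigger`, its action labels, and the `frames_required` threshold are invented here rather than taken from the robot described above.

```python
from dataclasses import dataclass, field


@dataclass
class GazeTrigger:
    """Hypothetical trigger: turn to a person once they have looked at the robot long enough."""
    frames_required: int = 5  # hypothetical number of consecutive "looking" frames
    engaged: bool = field(default=False, init=False)
    _count: int = field(default=0, init=False, repr=False)

    def update(self, person_looking: bool) -> str:
        """Process one camera frame and return the action to take."""
        if self.engaged:
            # Already attending to this person; keep facing them.
            return "attend"
        # Count consecutive frames of gaze; looking away resets the count.
        self._count = self._count + 1 if person_looking else 0
        if self._count >= self.frames_required:
            self.engaged = True
            return "turn_and_make_eye_contact"
        return "idle"


if __name__ == "__main__":
    trigger = GazeTrigger(frames_required=3)
    frames = [True, True, False, True, True, True, False]
    print([trigger.update(f) for f in frames])
```

Requiring several consecutive frames is one simple way to avoid reacting to a passing glance; the brief look-away in the example resets the count, so the robot responds only to the second, sustained gaze.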

Wheelchair Robot

It is not unusual for a wheelchair user to go out with a companion who pushes the wheelchair. Sociological analysis suggests that a wheelchair user in this situation, accompanied by a caregiver, tends to be regarded as a dependent person who “requires nursing care”. However, if the companion can walk alongside the wheelchair, the two can talk and communicate with each other more easily, and their relationship is expected to become closer.

To address this problem, we developed a robotic wheelchair that normally moves side by side with the companion. However, the robot lets the companion go ahead when someone approaches them or when the companion needs to open a door to a room. Laser range sensors and omnidirectional cameras mounted on the robot allow it to recognize the positions and movements of the companion, passers-by, surrounding walls, and nearby objects. The robot was exhibited at the International Home Care & Rehabilitation Exhibition 2010. It was also featured on TV programs, including “N STUDIO”, an evening news program on TBS, and attracted much attention.
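The formation switching described above can be sketched as follows. Everything here is a hypothetical simplification: the `Scene` fields stand in for what the laser range sensors and omnidirectional cameras would report, and the distance threshold, side offset, following gap, and the choice of the companion's left side are illustrative values, not parameters of the actual robot.

```python
import math
from dataclasses import dataclass
from typing import Optional

# Hypothetical parameters, chosen only for illustration.
PASSING_THRESHOLD_M = 3.0  # let the companion lead if a passer-by is closer than this
SIDE_OFFSET_M = 0.8        # lateral distance when moving side by side
FOLLOW_GAP_M = 1.0         # distance behind the companion when following


@dataclass
class Scene:
    """Simplified sensor summary (assumed, not the robot's real data format)."""
    oncoming_distance_m: Optional[float]  # nearest approaching passer-by, None if nobody
    companion_opening_door: bool


def choose_formation(scene: Scene) -> str:
    """Move side by side by default; let the companion go first when needed."""
    if scene.companion_opening_door:
        return "follow_companion"
    if scene.oncoming_distance_m is not None and scene.oncoming_distance_m < PASSING_THRESHOLD_M:
        return "follow_companion"
    return "side_by_side"


def target_position(cx: float, cy: float, heading_rad: float, formation: str) -> tuple[float, float]:
    """Where the wheelchair should be, given the companion's position and heading."""
    fx, fy = math.cos(heading_rad), math.sin(heading_rad)  # companion's forward direction
    if formation == "side_by_side":
        # Left of the companion: the forward vector rotated by +90 degrees is (-fy, fx).
        return (cx - SIDE_OFFSET_M * fy, cy + SIDE_OFFSET_M * fx)
    # Otherwise stay directly behind the companion.
    return (cx - FOLLOW_GAP_M * fx, cy - FOLLOW_GAP_M * fy)


if __name__ == "__main__":
    scene = Scene(oncoming_distance_m=2.0, companion_opening_door=False)
    mode = choose_formation(scene)
    print(mode, target_position(0.0, 0.0, 0.0, mode))
```

In a real system the target position would feed a motion controller that also avoids walls and obstacles; that part is omitted from this sketch.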

© Copyright Saitama University, All Rights Reserved.